Tracking Apple ARKit and ARCore

Over the past year, ARKit has been changing the way iOS users see and interact with the world, though many users don’t even know it. So what is ARKit, and why do we think it’s so important for Making Ideas Happen on mobile?

ARKit - short for Augmented Reality Kit - is Apple’s SDK for creating augmented reality apps on the iOS platform. Announced at Apple’s WWDC 2017 keynote and released with iOS 11 that September, ARKit is the company’s first major platform for building AR apps on its devices. Prior to its release, developers lacked an easy way to harness some of the basic functionality required for a truly immersive 3D experience.

With ARKit, harnessing the iPhone’s cameras and motion sensors is much easier, and because developers are working with a narrower range of devices and specifications - ARKit is compatible with the iPhone 6s and later (devices with an A9 chip or newer) - it has never been easier to develop augmented reality experiences on iOS. Apple followers by and large think the technology will be the basis of the much-hyped (though still unannounced) Apple AR glasses, but for now, its use is still limited to more recent iPhone iterations.

World Tracking with ARKit

ARKit is fundamentally a tool for integrating an iOS device's camera and motion-sensing features to build AR experiences in applications. At the heart of the technology is what Apple calls “world tracking.” World tracking - technically speaking, visual-inertial odometry - is a technique that allows a system to keep track of where it is in space by combining motion-sensor data with reference points it identifies and tracks in the camera image. You’ll have seen its setup step in just about any AR app on iOS: users are typically prompted to point their devices at a flat surface, after which hundreds of tiny reference nodes will briefly appear in the camera view. Once the ‘pins’ are dropped, the device uses them as a frame of reference, so an app can attach objects there (say, a Pokémon character or an IKEA couch) and then realistically scale them as the user moves around the room.

Until its most recent update, ARKit did this only with horizontal surfaces such as floors or tables, owing to the fact that the mechanics are slightly easier when the device is oriented parallel to those surfaces in space. ARKit 1.5 adds support for vertical planes (things like walls or doors), which opens up a whole new range of possibilities for game and app developers.
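For readers who want to see what this looks like in practice, here is a minimal Swift sketch of opting in to both kinds of plane detection. `ARWorldTrackingConfiguration`, its `planeDetection` option set, and `ARPlaneAnchor` are ARKit's own types; the helper names `makePlaneDetectingConfiguration` and `describe` are made up for illustration, and the whole block is wrapped in `#if canImport(ARKit)` on the assumption that it may be compiled on a platform without ARKit.

```swift
#if canImport(ARKit)
import ARKit

/// Builds a world-tracking configuration that looks for flat surfaces.
/// (Hypothetical helper name; the ARKit types and properties are real.)
func makePlaneDetectingConfiguration() -> ARWorldTrackingConfiguration {
    let configuration = ARWorldTrackingConfiguration()
    configuration.planeDetection = [.horizontal]
    // Vertical planes (walls, doors) arrived with ARKit 1.5 in iOS 11.3.
    if #available(iOS 11.3, *) {
        configuration.planeDetection.insert(.vertical)
    }
    return configuration
}

/// Hypothetical helper: a readable label for a plane ARKit has detected.
func describe(_ plane: ARPlaneAnchor) -> String {
    if #available(iOS 11.3, *), plane.alignment == .vertical {
        return "vertical plane (wall or door)"
    }
    return "horizontal plane (floor or table)"
}
#endif
```

In an app, the configuration would typically be passed to `run(_:)` on an `ARSCNView`'s session, and detected surfaces would arrive as `ARPlaneAnchor` objects through the view's delegate.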

Face Tracking with ARKit

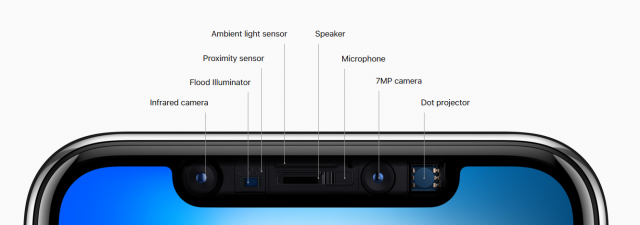

Another major breakthrough from Apple has been the advanced facial tracking of the iPhone X, and ARKit gives developers several ways to harness the device’s state-of-the-art capabilities.

Facial recognition has been on Apple’s radar for years, and the company made a series of major acquisitions to build out the technology: PrimeSense (3D sensors), Perceptio (image recognition and machine learning), Metaio (core AR), and Faceshift (motion capture), all with the goal of bringing these technologies to future devices.

Extreme Tech

After years of development, the technology finally showed up in full force on the iPhone X

Tech from all of these companies made its way into components of what Apple calls its TrueDepth camera on iPhone X. Using the hardware built into the iPhone X, developers have access to four main sets of features for advanced facial tracking.

Face Anchor


Apple

Face Anchor works on a principle similar to the one ‘world tracking’ uses for plane detection: it places a set of reference points on the user's face in order to keep track of its spatial relationship to the phone. This is the core of face-based AR with the iPhone, allowing objects to scale and render realistically relative to the position of the end user's face.
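As a rough Swift sketch of how an app might follow the face anchor, the class below starts a face-tracking session and logs the face's position on each update. `ARFaceTrackingConfiguration`, `ARSessionDelegate`, and `ARFaceAnchor` are ARKit APIs; the `FaceTracker` class and its `start(on:)` method are hypothetical names, and printing the position is only a stand-in for whatever the app would actually do with it.

```swift
#if canImport(ARKit)
import ARKit

/// Hypothetical session delegate that logs where the tracked face is.
final class FaceTracker: NSObject, ARSessionDelegate {
    /// Starts face tracking; returns false on devices without a TrueDepth camera.
    func start(on session: ARSession) -> Bool {
        guard ARFaceTrackingConfiguration.isSupported else { return false }
        session.delegate = self
        session.run(ARFaceTrackingConfiguration())
        return true
    }

    // ARKit calls this whenever anchors it is tracking have changed.
    func session(_ session: ARSession, didUpdate anchors: [ARAnchor]) {
        for case let face as ARFaceAnchor in anchors {
            // The last column of the anchor's transform holds its position
            // in the session's world coordinate space (in meters).
            let position = face.transform.columns.3
            print("Face at x: \(position.x), y: \(position.y), z: \(position.z)")
        }
    }
}
#endif
```

Because the session holds its delegate weakly, the app would need to keep its own reference to the `FaceTracker` instance for as long as tracking should continue.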

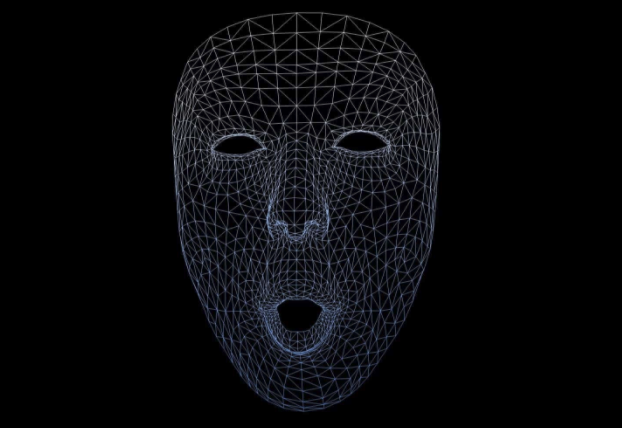

Face Mesh

MeasureKit

Face Mesh works on top of Face Anchor and creates a detailed topography of the user's face, which it returns as a 3D mesh. This map allows developers to ‘paint’ a user’s face with graphical overlays, and the level of technical detail in the mesh means that the end-user experience is much more realistic than was possible on any previous device.
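One way to picture the mesh is to draw it. The Swift sketch below, which assumes a SceneKit-based `ARSCNView`, renders the mesh as a wireframe and keeps it in step with the user's face. `ARSCNFaceGeometry` and the two `ARSCNViewDelegate` callbacks are ARKit APIs; the `FaceMeshRenderer` class name and the choice of a wireframe are illustrative assumptions.

```swift
#if canImport(ARKit)
import ARKit
import SceneKit

/// Hypothetical view delegate that draws the face mesh as a wireframe.
final class FaceMeshRenderer: NSObject, ARSCNViewDelegate {
    // Supply a SceneKit node for each newly detected face anchor.
    func renderer(_ renderer: SCNSceneRenderer, nodeFor anchor: ARAnchor) -> SCNNode? {
        guard anchor is ARFaceAnchor,
              let device = renderer.device,
              let geometry = ARSCNFaceGeometry(device: device) else { return nil }
        // Draw only the triangle edges so the mesh topology is visible.
        geometry.firstMaterial?.fillMode = .lines
        return SCNNode(geometry: geometry)
    }

    // Reshape the mesh whenever ARKit updates the face anchor.
    func renderer(_ renderer: SCNSceneRenderer, didUpdate node: SCNNode, for anchor: ARAnchor) {
        guard let face = anchor as? ARFaceAnchor,
              let geometry = node.geometry as? ARSCNFaceGeometry else { return }
        geometry.update(from: face.geometry)
    }
}
#endif
```

Swapping the wireframe material for a textured one is roughly how an app would ‘paint’ an overlay onto the face.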

Directional Light Estimating

Apple

Adding to the realism of face-based AR on the iPhone X, a feature called directional light estimating uses the lighting on the user's face to create a series of modifiers for the graphical overlays being placed. What this means is that ARKit can adjust things like shading automatically to match how the user’s face is lit in real life, so the overlays adjust on the fly to the natural lighting of the scene. A great example of all three of these feature sets can be seen in Sephora’s Virtual Artist feature, which allows users to try on cosmetic products from their phone.
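To make the idea concrete, here is a small Swift sketch that copies ARKit's light estimate onto a SceneKit light, so that virtual overlays are lit more like the real face. `ARDirectionalLightEstimate` and its properties are ARKit APIs; the `applyLightEstimate(from:to:)` helper is a hypothetical name, and the sketch assumes a SceneKit scene and leaves orienting the light along the returned direction to the caller.

```swift
#if canImport(ARKit)
import ARKit
import SceneKit

/// Hypothetical helper: copies ARKit's estimate of the real-world light
/// onto a SceneKit light. Returns the estimated direction of the
/// strongest light, or nil if no directional estimate is available.
@discardableResult
func applyLightEstimate(from frame: ARFrame, to light: SCNLight) -> simd_float3? {
    // During face tracking, the frame's estimate is the directional subclass.
    guard let estimate = frame.lightEstimate as? ARDirectionalLightEstimate else {
        return nil
    }
    light.type = .directional
    light.intensity = estimate.primaryLightIntensity
    light.temperature = estimate.ambientColorTemperature  // in kelvin
    return estimate.primaryLightDirection
}
#endif
```

A function like this would typically be called once per frame, for example from the session delegate's frame-update callback.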

Blend Shape Location

Finally, ARKit also provides a relatively simple mathematical way to describe expressions on faces, and can then translate them onto animated 3D objects. This is the feature that most directly underlies all those cute Animojis you can’t stop sending your friends and coworkers, but it also has big implications for companies like Brain Power, which seeks to use facial recognition software to help individuals on the autism spectrum detect expressions (and the emotions that underlie them) on the faces of others.
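In practice, that "mathematical way" is a dictionary of coefficients, one per facial movement, each running from 0 (neutral) to 1 (fully expressed). The Swift sketch below turns three of them into a rough label. The blend shape keys (`.mouthSmileLeft`, `.mouthSmileRight`, `.jawOpen`) are ARKit's; the `expressionLabel` functions, the 0.5 threshold, and the label strings are assumptions made purely for illustration and are not how Animoji or any real product classifies expressions.

```swift
#if canImport(ARKit)
import ARKit
#endif

/// Hypothetical helper: maps a few blend-shape coefficients
/// (0 = neutral, 1 = fully expressed) to a rough label.
/// The threshold and labels are illustrative assumptions.
func expressionLabel(smileLeft: Float,
                     smileRight: Float,
                     jawOpen: Float,
                     threshold: Float = 0.5) -> String {
    let smile = (smileLeft + smileRight) / 2
    if smile > threshold { return "smiling" }
    if jawOpen > threshold { return "mouth open" }
    return "neutral"
}

#if canImport(ARKit)
/// Reads the same three coefficients from a live ARKit face anchor.
func expressionLabel(for face: ARFaceAnchor) -> String {
    let shapes = face.blendShapes
    return expressionLabel(
        smileLeft: shapes[.mouthSmileLeft]?.floatValue ?? 0,
        smileRight: shapes[.mouthSmileRight]?.floatValue ?? 0,
        jawOpen: shapes[.jawOpen]?.floatValue ?? 0)
}
#endif
```

An animated character works the same way in reverse: each coefficient drives the matching movement on the 3D model instead of feeding a label.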

"The Complex Made Simple"

ARKit has been available for less than a year, but its potential to massively scale the use of AR is already being realized across a huge range of use cases. Speaking to Good Morning America shortly ahead of ARKit’s release in September of last year, Apple CEO Tim Cook was unreserved in his description of his company’s most exciting technology:

"Later today hundreds of millions of customers will be able to use AR for the first time, so we're bringing it to the mainstream," Cook said. "We're taking the complex and making it simple. We want everybody to be able to use AR."

"This is a day to remember," he added, "This is a profound day."
